Difference between revisions of "ALPHA-One"

Revision as of 16:28, 12 May 2025

The ALPHA-One is a generative AI agent running a 7B LLM. It is built on the StarPro64, a RISC-V single-board computer with a 20 TOPS (INT8) NPU, and ships with a preinstalled 64GB eMMC module containing a 7B Deepseek/Qwen LLM packaged in Docker form.

SDK releases

- SDK document (some in Chinese): https://files.pine64.org/SDK/StarPro64/docs.7z

- SDK Release:

- LLM and tools release:

Main Board and SoC Specification

- Based on the StarPro64 single-board computer (see the StarPro64 wiki page)

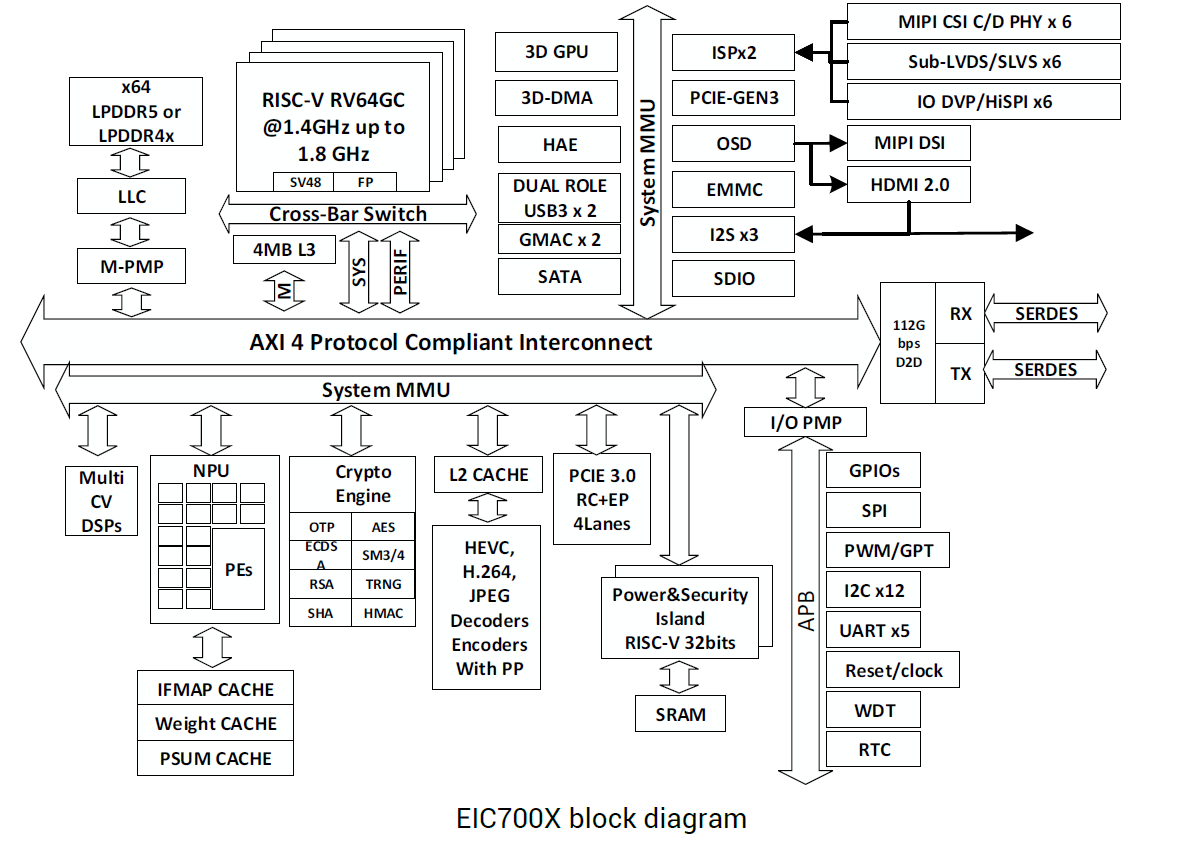

- Based on the ESWIN EIC7700X SoC

Features

System Memory

- 32GB of 64-bit LPDDR5 RAM at 6400MHz

- 64GB eMMC preinstalled with a 7B Deepseek/Qwen LLM
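
As a rough illustration of what the memory figures above imply, the sketch below computes the theoretical peak memory bandwidth. It assumes the quoted 6400MHz denotes 6400 mega-transfers per second on the 64-bit bus, which is the usual LPDDR5-6400 convention but is not stated on this page. The function name is hypothetical, and real sustained bandwidth will be lower than this peak.

```python
def peak_bandwidth_gb_s(bus_width_bits: int, mega_transfers_per_s: int) -> float:
    """Theoretical peak bandwidth in GB/s (1 GB = 1e9 bytes).

    Assumption: one transfer moves bus_width_bits across the bus.
    """
    bytes_per_transfer = bus_width_bits / 8
    return bytes_per_transfer * mega_transfers_per_s * 1e6 / 1e9


# 64-bit bus, assumed 6400 MT/s
bandwidth = peak_bandwidth_gb_s(64, 6400)
print(f"Theoretical peak: {bandwidth:.1f} GB/s")
```

LLM token generation is often limited by how fast weights can be read from memory, so this peak figure gives a loose upper bound to keep in mind when reading the throughput numbers elsewhere on this page.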

Case

- CNC-machined from a single aluminum block

- Passive cooling, with a built-in heat pipe in direct contact with the SoC

Network

- Dual 10/100/1000Mbps Ethernet

- 2.4GHz/5GHz MIMO WiFi 802.11 b/g/n/ac/ax with Bluetooth 5.3

Expansion Ports

- 2× USB3.0 Dedicated Host port

- 2× USB2.0 Shared Host port

- Digital Video output up to 4K@60Hz

- 3.5mm audio jack with microphone input

Certifications

- Not yet available

Potential Improvement

- Current 7B LLM throughput is around 3.5 tokens/second when running in Docker form; this could potentially improve to as much as 10 tokens/second when the LLM runs in native mode.

- LLM capacity could potentially increase from 7B to 15B with system software optimization.

- If the ALPHA-One proves its potential, consider releasing an ALPHA-Two that can host up to a 30B LLM.
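
To put the figures above in perspective, here is a minimal back-of-the-envelope sketch. It estimates how long a 256-token reply takes at the current and projected throughput, and how much RAM the weights of a 7B, 15B, or 30B model would need. The bit widths (4, 8, and 16 bits per weight) and the 256-token reply length are assumptions chosen for illustration; this page does not state which quantization the shipped model uses. The estimate covers weights only and ignores the KV cache, activations, and the operating system, so real headroom is smaller.

```python
RAM_GB = 32  # system memory from the specification above


def generation_time_s(num_tokens: int, tokens_per_s: float) -> float:
    """Seconds needed to generate num_tokens at a steady rate."""
    return num_tokens / tokens_per_s


def weight_size_gb(params_billion: float, bits_per_weight: int) -> float:
    """Approximate weight storage in GB (1 GB = 1e9 bytes), weights only."""
    return params_billion * bits_per_weight / 8


# Reply latency at the throughput figures quoted on this page
for label, rate in [("Docker (current)", 3.5), ("native (projected)", 10.0)]:
    print(f"{label}: {generation_time_s(256, rate):.1f} s for 256 tokens")

# Weight footprint for the model sizes mentioned on this page
for params in (7, 15, 30):
    for bits in (4, 8, 16):  # assumed quantization levels
        size = weight_size_gb(params, bits)
        verdict = "fits" if size < RAM_GB else "does not fit"
        print(f"{params}B @ {bits}-bit: {size:.1f} GB of weights, {verdict} in {RAM_GB} GB")
```

Under these assumptions, a 30B model leaves almost no headroom in 32GB at 8 bits per weight, which suggests that a device hosting it would need either more aggressive quantization or more memory.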