PineCube

Specifications

- Dimensions: 55mm x 51mm x 51.5mm

- Weight: 55g

- Storage:

- MicroSD slot, bootable

- 128Mb SPI NOR flash, bootable

- Cameras: OV5640, 5Mpx

- CPU: Allwinner(Sochip) ARM Cortex-A7 MPCore, 800MHz

- RAM: 128MB DDR3

- I/O:

- 10/100Mbps Ethernet with passive PoE

- USB 2.0 A host

- 26 pins GPIO port

- 2x 3.3V Output

- 2x 5V Output

- 1x I2C

- 2x UART

- 2x PWM

- 1x SPI

- 1x eMMC/SDIO/SD (8-bit)

- 6x Interrupts

- Note: Interfaces are multiplexed, so they cannot all be used at the same time

- Internal microphone

- Network:

- WiFi

- Screen: optional 4.5" RGB LCD screen

- Misc. features:

- Volume and home buttons

- Speakers and Microphone

- Power DC in:

- 5V 1A from MicroUSB Port or GPIO port

- 4V-18V from Ethernet passive PoE

- Battery: optional 950-1600mAh Lithium Polymer Ion battery pack (model 903048), can be purchased at Amazon.com

PineCube board information, schematics and certifications

- PineCube mainboard schematic:

- PineCube faceboard schematic:

- PineCube certifications:

Datasheets for components and peripherals

- Allwinner (Sochip) S3 SoC information:

- X-Powers AXP209 PMU (Power Management Unit) information:

- CMOS camera module information:

- LCD touch screen panel information:

- Lithium battery information:

- WiFi/BT module information:

- Case information:

Operating Systems

Mainlining Efforts

Please note:

- this list is most likely not complete

- no review of functionality is done here, it only serves as a collection of efforts

| Linux kernel | | |
|---|---|---|
| Type | Link | Available in version |
| Devicetree Entry Pinecube | https://lkml.org/lkml/2020/9/22/1241 | 5.10 |
| Correction for AXP209 driver | https://lkml.org/lkml/2020/9/22/1243 | 5.9 |
| Additional Fixes for AXP209 driver | https://lore.kernel.org/lkml/20201031182137.1879521-8-contact@paulk.fr/ | TBD (5.11?) |
| Device Tree Fixes | https://lore.kernel.org/lkml/20201003234842.1121077-1-icenowy@aosc.io/ | 5.10 |
| U-boot | | |
| Type | Link | Available in version |
| PineCube Board Support | https://patchwork.ozlabs.org/project/uboot/list/?series=210044 | expected in v2021.01 |
| Buildroot | | |
| No known mainlining efforts yet | | |

NixOS

Buildroot

Elimo Engineering integrated support for the PineCube into Buildroot.

This has not been merged into upstream Buildroot yet, but you can find the repo on Elimo's GitHub account and build instructions in the board support directory readme. The most important thing that this provides is support for the S3's DDR3 in u-boot. Unfortunately mainline u-boot does not have that yet, but the u-boot patches from Daniel Fullmer's NixOS repo were easy enough to use on buildroot. This should get you a functional system that boots to a console on UART0. It's pretty fast too, getting there in 1.5 seconds from u-boot to login prompt.

Armbian

The only Armbian release with support for Ethernet and the camera module is the Ubuntu Groovy release. It is an experimental, automatically generated release, and it appears to support more hardware than the other Armbian releases.

Armbian Build Image with motion [microSD Boot] [20201222]

- Armbian Ubuntu Focal build for the Pinecube with the motion (detection) package preinstalled.

- There are 2 ways to interact with the OS:

- Scan for the device IP (with hostname pinecube)

- Use the PINE64 USB SERIAL CONSOLE/PROGRAMMER to login to the serial console, then check for assigned IP

- DD image (for 8GB microSD card and above)

- Direct download from pine64.org

- MD5 (XZip file): 61e5a6d3ab0f74ce8367c97b7f8cbb7b

- File Size: 328MB

- Direct download from pine64.org
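Before writing the image to a microSD card it is worth checking the download against the MD5 listed above. The following is a minimal sketch, assuming md5sum is installed; the function name verify_md5 and the image file name in the usage line are our own placeholders, not names used by the download page.

```shell
# verify_md5 FILE EXPECTED_MD5
# Succeeds when the MD5 digest of FILE equals EXPECTED_MD5.
# (verify_md5 is a hypothetical helper name chosen for this sketch.)
verify_md5() {
    local file="$1" expected="$2" actual
    # md5sum prints "<digest>  <filename>"; keep only the digest.
    actual=$(md5sum "$file" | cut -d ' ' -f 1)
    if [ "$actual" = "$expected" ]; then
        echo "OK: $file"
    else
        echo "MISMATCH: $file (got $actual)" >&2
        return 1
    fi
}
```

Usage, with a placeholder file name: verify_md5 pinecube-armbian.img.xz 61e5a6d3ab0f74ce8367c97b7f8cbb7b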

GitHub gist for the userpatch which pre-installs and configures the motion (detection) package.

Armbian builds for the PineCube are available for download, once again thanks to the work of Icenowy Zheng. Although not officially supported, they enable the use of Debian and Ubuntu.

A serial console can be established at 115200 baud, 8N1 (no hardware flow control). Login credentials are as usual in Armbian (login: root, password: 1234).

The motion daemon can be enabled with systemctl (as root): systemctl enable motion. Then just reboot.

Serial connection using screen and the woodpecker USB serial device

First connect the woodpecker USB serial device to the PineCube. Pin 1 on the PineCube has a small white dot on the PCB, which should be directly next to the microUSB power connector. Attach the GND pin on the woodpecker to pin 6 (GND) on the PineCube, TXD from the woodpecker to pin 10 (UART_RXD) on the PineCube, and RXD from the woodpecker to pin 8 (UART_TXD) on the PineCube.

On the host system which has the woodpecker USB serial device attached, it is possible to run screen and to communicate directly with the PineCube:

screen /dev/ttyUSB0 115200

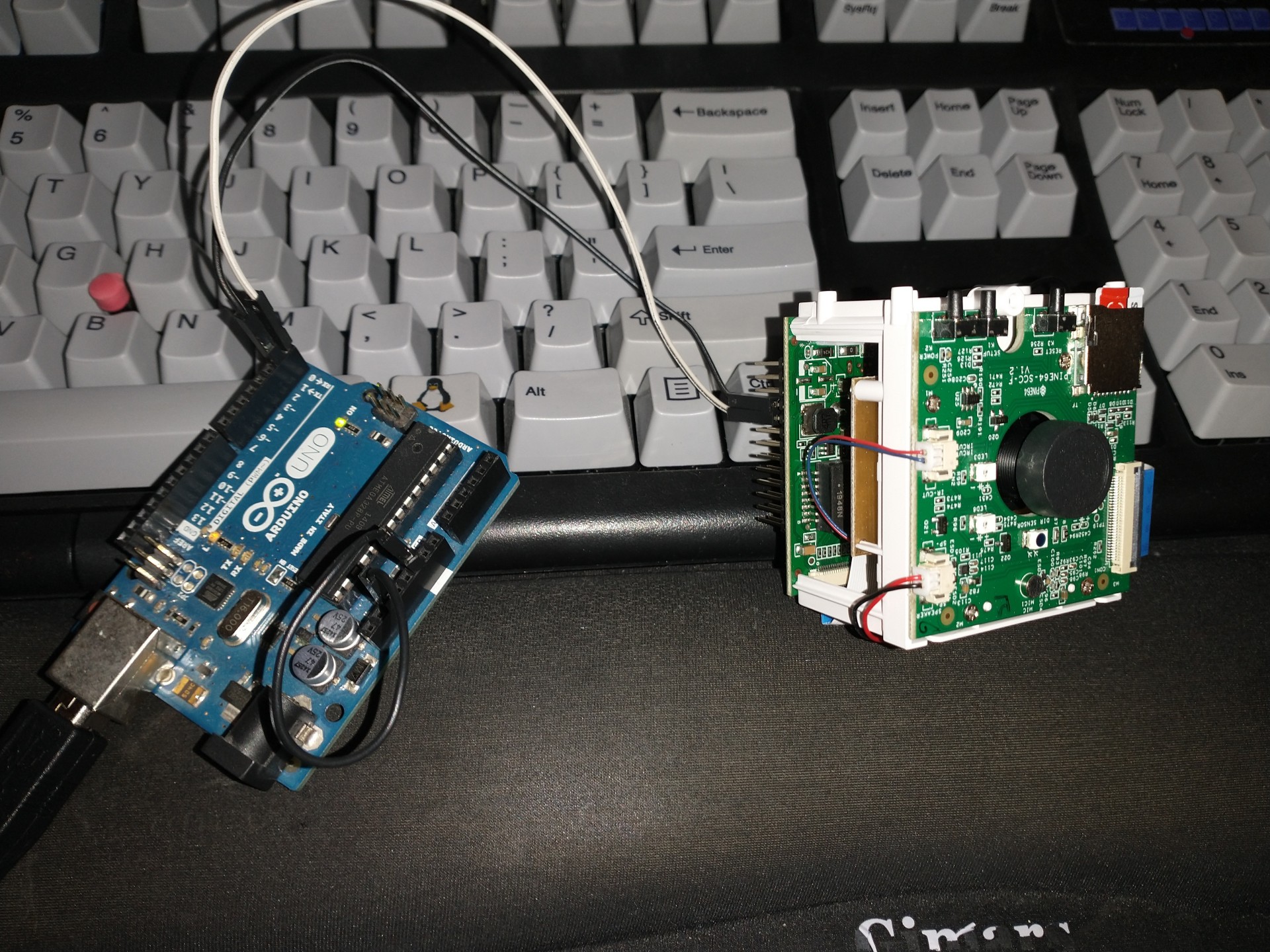

Serial connection using screen and Arduino Uno

You can use the Arduino Uno or other Arduino boards as a USB serial device.

First you must either remove the microcontroller from its socket or, if your Arduino board does not allow this, use a jumper wire to connect RESET (RST) to GND, which holds the microcontroller in reset and keeps it off the serial lines.

After this you can use either the Arduino IDE and its Serial Monitor after selecting your /dev/ttyACMx Arduino device, or screen:

screen /dev/ttyACM0 115200

Serial connection using PinePhone/Pinebook Pro serial debugging cable

You can use the serial console USB cable for the PinePhone and Pinebook Pro, available at the store. Using a female terminal block and breadboard wires, connect it to the GPIO block at the following locations in a "null modem" configuration, with transmit and receive crossed between your computer and the PineCube:

- S: Ground (to pin 9)

- R: Transmit (to pin 8)

- T: Receive (to pin 10)

From Linux you can access the console of the pinecube using the screen command:

screen /dev/ttyUSB0 115200
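The examples above use /dev/ttyUSB0 for USB serial adapters and /dev/ttyACM0 for Arduino boards, but the number can differ when several adapters are attached. Here is a small sketch that prints the first matching device node; the function name find_serial_port and its optional directory argument are our own additions for illustration.

```shell
# find_serial_port [DEV_DIR]
# Prints the first ttyUSB* or ttyACM* node found in DEV_DIR (default /dev).
# Fails (non-zero status) when no such node exists.
find_serial_port() {
    local dev_dir="${1:-/dev}" dev
    for dev in "$dev_dir"/ttyUSB* "$dev_dir"/ttyACM*; do
        # An unmatched glob stays as a literal pattern, so test existence.
        if [ -e "$dev" ]; then
            printf '%s\n' "$dev"
            return 0
        fi
    done
    return 1
}
```

It can then be combined with screen: screen "$(find_serial_port)" 115200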

Basic bandwidth tests with iperf3

Install armbian-config:

apt install armbian-config

Enable iperf3 through the menu in armbian-config:

armbian-config

On a test computer on the same network segment run iperf3 as a client:

iperf3 -c pinecube -t 60

On the same test computer, run iperf3 in the reverse direction:

iperf3 -c pinecube -t 60 -R

Performance results

Wireless network performance

The performance results reflect using the wireless network. The link speed was 72.2Mb/s using 2.462GHz wireless. In sixty-second iperf3 tests, the observed throughput varied between 28 and 50Mb/s to a host on the same network segment. The testing host is connected to an Ethernet switch which is also connected to a wireless bridge. The wireless network uses WPA2 and the PineCube is connected to this wireless network bridge.

Client rate for sixty seconds:

[ ID] Interval Transfer Bitrate Retr
[ 5] 0.00-60.00 sec 293 MBytes 41.0 Mbits/sec 1 sender
[ 5] 0.00-60.01 sec 291 MBytes 40.7 Mbits/sec receiver

Client rate with -R for sixty seconds:

[ ID] Interval Transfer Bitrate Retr
[ 5] 0.00-60.85 sec 263 MBytes 36.2 Mbits/sec 3 sender
[ 5] 0.00-60.00 sec 259 MBytes 36.1 Mbits/sec receiver

Using WireGuard to protect the traffic between the PineCube and the test system, the performance characteristics change only slightly.

Client rate for sixty seconds with WireGuard:

[ ID] Interval Transfer Bitrate Retr
[ 5] 0.00-60.00 sec 230 MBytes 32.1 Mbits/sec 0 sender
[ 5] 0.00-60.09 sec 229 MBytes 32.0 Mbits/sec receiver

Client rate with -R for sixty seconds with WireGuard:

[ ID] Interval Transfer Bitrate Retr
[ 5] 0.00-60.14 sec 246 MBytes 34.3 Mbits/sec 7 sender
[ 5] 0.00-60.00 sec 245 MBytes 34.2 Mbits/sec receiver

Wired network performance

The Ethernet network does not work in the current Ubuntu Focal Armbian image or the Ubuntu Groovy Armbian image.

The performance results reflect using the Ethernet network. The link speed was 100Mb/s using a 1000Mb/s prosumer switch. In sixty-second iperf3 tests, the observed throughput varied between 92 and 102Mb/s to a host on the same network segment. The testing host is connected to the same Ethernet switch as the PineCube.

Client rate for sixty seconds:

[ ID] Interval Transfer Bitrate Retr
[ 5] 0.00-60.00 sec 675 MBytes 94.4 Mbits/sec 0 sender
[ 5] 0.00-60.01 sec 673 MBytes 94.0 Mbits/sec receiver

Client rate with -R for sixty seconds:

[ ID] Interval Transfer Bitrate Retr
[ 5] 0.00-60.00 sec 673 MBytes 94.1 Mbits/sec 0 sender
[ 5] 0.00-60.00 sec 673 MBytes 94.1 Mbits/sec receiver

Using WireGuard to protect the traffic between the PineCube and the test system, the performance characteristics change only slightly.

Client rate for sixty seconds with WireGuard:

[ ID] Interval Transfer Bitrate Retr
[ 5] 0.00-60.00 sec 510 MBytes 71.2 Mbits/sec 0 sender
[ 5] 0.00-60.01 sec 509 MBytes 71.1 Mbits/sec receiver

Client rate with -R for sixty seconds with WireGuard:

[ ID] Interval Transfer Bitrate Retr
[ 5] 0.00-60.01 sec 642 MBytes 89.8 Mbits/sec 0 sender
[ 5] 0.00-60.00 sec 641 MBytes 89.7 Mbits/sec receiver
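To compare runs, for example with and without WireGuard, it can help to pull the sender bitrate out of the iperf3 summary and compute the relative change. This is a rough sketch that assumes the plain-text iperf3 output format shown in the results above and an awk implementation on the test computer; the helper names sender_mbits and percent_change are ours.

```shell
# sender_mbits: read iperf3 text output on stdin and print the bitrate
# (the number just before "Mbits/sec") from the "sender" summary line.
sender_mbits() {
    awk '/sender/ {
        for (i = 2; i <= NF; i++)
            if ($i == "Mbits/sec") print $(i - 1)
    }'
}

# percent_change BASE NEW: print the change from BASE to NEW in percent,
# rounded to one decimal place. Negative values mean NEW is slower.
percent_change() {
    awk -v base="$1" -v new="$2" \
        'BEGIN { printf "%.1f\n", (new - base) / base * 100 }'
}
```

For example, iperf3 -c pinecube -t 60 | sender_mbits prints the client rate of one run, and percent_change 94.4 71.2 compares the wired client rates listed above without and with WireGuard.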

Streaming the camera to the network

In this section we document a variety of ways to stream video to the network from the PineCube. Unless specified otherwise, all of these examples have been tested on Ubuntu groovy (20.10). See this small project for the pinecube for easy to use programs tuned for the PineCube.

In the examples which use h264, we are currently encoding using the x264 library which is not very fast on this CPU. The SoC in the PineCube does have a hardware h264 encoder, which the authors of these examples have so far not tried to use. It appears that https://github.com/gtalusan/gst-plugin-cedar might provide easy access to it, however. Please update this wiki if you find out how to use the hardware encoder!

gstreamer: h264 HLS

HLS (HTTP Live Streaming) has the advantage that it is easy to play in any modern web browser, including Android and iPhone devices, and that it is easy to put an HTTP caching proxy in front of it to scale to many viewers. It has the disadvantages of adding (at minimum) several seconds of latency, and of requiring an h264 encoder (which we have in hardware, but haven't figured out how to use yet, so, we're stuck with the slow software one).

HLS segments a video stream into small chunks which are stored as .ts (MPEG Transport Stream) files, and (re)writes a playlist.m3u8 file which clients constantly refresh to discover which .ts files they should download. We use a tmpfs file system to avoid needing to write these files to the sdcard in the PineCube. Besides the program which writes the .ts and .m3u8 files (gst-launch-1.0, in our case), we'll also need a very basic web page in tmpfs and a webserver to serve the files.

Create an hls directory to be shared in the existing tmpfs file system that is mounted at /dev/shm:

mkdir /dev/shm/hls/

Create an index.html and optionally a favicon.ico or even a set of icons, and then put the files into the /dev/shm/hls directory. An example index.html that works is available in the Getting Started section of the README for hls.js. We recommend downloading the hls.js file and editing the example index.html to serve your local copy of it instead of fetching it from a CDN. This file provides HLS playback capabilities in browsers which don't natively support it (which is most browsers aside from the iPhone).
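As a starting point, the following sketch writes a minimal index.html modelled on the hls.js Getting Started example. It assumes you have saved a local copy of hls.js next to it under the name hls.min.js (that file name, and the helper name write_hls_index, are our assumptions), and it uses only the Hls.isSupported(), loadSource() and attachMedia() calls from that example.

```shell
# write_hls_index DIR [HLS_JS]
# Writes a minimal player page to DIR/index.html. HLS_JS is the file name
# of a local copy of hls.js (assumed default: hls.min.js).
write_hls_index() {
    local dir="$1" hls_js="${2:-hls.min.js}"
    cat > "$dir/index.html" <<EOF
<!DOCTYPE html>
<html>
<head><meta charset="utf-8"><title>PineCube</title></head>
<body>
<video id="video" controls autoplay muted></video>
<script src="$hls_js"></script>
<script>
  var video = document.getElementById('video');
  var src = 'playlist.m3u8';
  if (Hls.isSupported()) {
    // Browsers without native HLS: let hls.js feed the video element.
    var hls = new Hls();
    hls.loadSource(src);
    hls.attachMedia(video);
  } else if (video.canPlayType('application/vnd.apple.mpegurl')) {
    // Browsers with native HLS playback can load the playlist directly.
    video.src = src;
  }
</script>
</body>
</html>
EOF
}
```

Usage: write_hls_index /dev/shm/hls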

In one terminal, run the camera capture pipeline:

cd /dev/shm/hls/ &&

media-ctl --set-v4l2 '"ov5640 1-003c":0[fmt:UYVY8_2X8/320x240@1/15]' &&

gst-launch-1.0 v4l2src ! video/x-raw,width=320,height=240,format=UYVY,framerate=15/1 ! decodebin ! videoconvert ! video/x-raw,format=I420 ! clockoverlay ! timeoverlay valignment=bottom ! x264enc speed-preset=ultrafast tune=zerolatency ! mpegtsmux ! hlssink target-duration=1 playlist-length=2 max-files=3

Alternatively it is possible to capture at a higher resolution:

media-ctl --set-v4l2 '"ov5640 1-003c":0[fmt:UYVY8_2X8/1920x1080@1/15]'

cd /dev/shm/hls/ && gst-launch-1.0 v4l2src ! video/x-raw,width=1920,height=1080,format=UYVY,framerate=15/1 ! decodebin ! videoconvert ! video/x-raw,format=I420 ! clockoverlay ! timeoverlay valignment=bottom ! x264enc speed-preset=ultrafast tune=zerolatency ! mpegtsmux ! hlssink target-duration=1 playlist-length=2 max-files=3
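The resolution and frame rate appear twice in these commands, once in the media-ctl sensor format and once in the gstreamer caps, and the two need to agree. The sketch below generates both commands from a single set of numbers so they cannot drift apart. The helper name hls_capture_cmd is ours, it only prints the commands rather than running them, and resolutions other than the two shown above are untested here.

```shell
# hls_capture_cmd WIDTH HEIGHT [FPS]
# Prints (does not run) the sensor setup command and the capture pipeline
# for one resolution, using the same numbers in both places.
hls_capture_cmd() {
    local w="$1" h="$2" fps="${3:-15}"
    printf '%s\n' "media-ctl --set-v4l2 '\"ov5640 1-003c\":0[fmt:UYVY8_2X8/${w}x${h}@1/${fps}]'"
    printf '%s\n' "gst-launch-1.0 v4l2src ! video/x-raw,width=${w},height=${h},format=UYVY,framerate=${fps}/1 ! decodebin ! videoconvert ! video/x-raw,format=I420 ! clockoverlay ! timeoverlay valignment=bottom ! x264enc speed-preset=ultrafast tune=zerolatency ! mpegtsmux ! hlssink target-duration=1 playlist-length=2 max-files=3"
}
```

To inspect the result, run hls_capture_cmd 1920 1080. To execute it, pipe the output into a shell from the HLS directory: cd /dev/shm/hls/ && hls_capture_cmd 1920 1080 | sh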

In another, run a simple single threaded webserver which will serve html, javascript, and HLS to web clients:

cd /dev/shm/hls/ && python3 -m http.server

Alternatively, install a more efficient web server (apt install nginx) and set the server root in the default configuration to /dev/shm/hls. nginx will listen on port 80, whereas the python3 server defaults to port 8000.
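For the nginx route, a server block along the following lines should be enough. This is a sketch rather than a tested configuration: the helper name print_nginx_hls_site is ours, the Cache-Control header is an optional addition, and the target path in the usage line assumes the Debian/Ubuntu nginx package layout.

```shell
# print_nginx_hls_site [ROOT]
# Prints a minimal nginx server block that serves ROOT on port 80.
print_nginx_hls_site() {
    local root="${1:-/dev/shm/hls}"
    cat <<EOF
server {
    listen 80 default_server;
    root $root;
    index index.html;

    location / {
        # The playlist is rewritten constantly; ask clients to revalidate
        # instead of reusing a cached copy.
        add_header Cache-Control no-cache;
    }
}
EOF
}
```

Usage (as root, assuming the Ubuntu package layout): print_nginx_hls_site > /etc/nginx/sites-available/default && nginx -t && systemctl reload nginx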

It should be possible to view the HLS stream directly in a web browser by visiting http://pinecube:8000/ if pinecube is the correct hostname and the name correctly resolves.

You can also view the HLS stream with VLC: vlc http://pinecube:8000/playlist.m3u8

Or with gst-play-1.0: gst-play-1.0 http://pinecube:8000/playlist.m3u8 (or with mpv, ffplay, etc)

To find out about other options you can configure in the hlssink gstreamer element, you can run gst-inspect-1.0 hlssink.

It is worth noting here that the hlssink element in GStreamer is not widely used in production environments. It is handy for testing, but for real-world free-software HLS live streaming deployments the standard tool today (January 2021) is nginx's RTMP module which can be used with ffmpeg to produce "adaptive streams" which are reencoded at varying quality levels. You can send data to an nginx-rtmp server from a gstreamer pipeline using the rtmpsink element. It is also worth noting that gstreamer has a new hlssink2 element which we have not tested; perhaps in the future it will even have a webserver!

v4l2rtspserver: h264 RTSP

Install dependencies:

apt install -y cmake gstreamer1.0-plugins-bad gstreamer1.0-tools \

gstreamer1.0-plugins-good v4l-utils gstreamer1.0-alsa alsa-utils libpango1.0-0 \

libpango1.0-dev gstreamer1.0-plugins-base gstreamer1.0-x x264 \

gstreamer1.0-plugins-{good,bad,ugly} liblivemedia-dev liblog4cpp5-dev \

libasound2-dev vlc libssl-dev iotop libasound2-dev liblog4cpp5-dev \

liblivemedia-dev autoconf automake libtool v4l2loopback-dkms liblog4cpp5-dev \

libvpx-dev libx264-dev libjpeg-dev libx265-dev linux-headers-dev-sunxi;

Install kernel source and build v4l2loopback module:

apt install linux-source-5.11.3-dev-sunxi64 # Adjust kernel version number to match current installation with "uname -r"
cd /usr/src/v4l2loopback-0.12.3; make && make install && depmod -a

Build required v4l2 software:

git clone --recursive https://github.com/mpromonet/v4l2tools && cd v4l2tools && make && make install;
git clone --recursive https://github.com/mpromonet/v4l2rtspserver && cd v4l2rtspserver && cmake -D LIVE555URL=https://download.videolan.org/pub/contrib/live555/live.2020.08.19.tar.gz . && make && make install;

Running the camera:

media-ctl --set-v4l2 '"ov5640 1-003c":0[fmt:UYVY8_2X8/640x480@1/30]';
modprobe v4l2loopback video_nr=10 debug=2;
v4l2compress -fH264 -w -vv /dev/video0 /dev/video10 &
v4l2rtspserver -v -S -W 640 -H 480 -F 10 -b /usr/local/share/v4l2rtspserver/ /dev/video10

Note that you might get an error when running media-ctl indicating that the resource is busy. This could be because of the motion program that runs on the stock OS installation. Check and kill any running /usr/bin/motion processes before running the above steps.
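A small sketch of that check follows. It assumes the daemon's process and systemd unit are both called motion, as in the systemctl command earlier on this page, and that pgrep and pkill are available; the function name free_camera is ours.

```shell
# free_camera: stop the motion daemon if it is running, so that media-ctl
# and the streaming tools can open the camera.
free_camera() {
    if pgrep -x motion >/dev/null 2>&1; then
        echo "motion is running; stopping it"
        # Prefer stopping the service; fall back to killing the process.
        systemctl stop motion 2>/dev/null || pkill -x motion
    else
        echo "motion is not running"
    fi
}
```

Run free_camera as root before the media-ctl command above.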

The v4l2compress/v4l2rtspserver method of streaming the camera uses roughly 45-50% of the CPU for compression of the stream into H264 (640x480@7fps) and around 1-2% of the CPU for serving the HLS stream. Total system RAM used is roughly 64MB and the load average is ~0.4-0.5 when idle, and ~0.51-0.60 with one HLS client streaming the camera.

You'll probably see about a 2-3s lag with this approach, possibly due to the H264 compression and the lack of hardware acceleration at the moment.

gstreamer: JPEG RTSP

GStreamer's RTSP server isn't an element you can use with gst-launch, but rather a library. We failed to build its example program, so instead used this very small 3rd party tool which is based on it: https://github.com/sfalexrog/gst-rtsp-launch/

After building gst-rtsp-launch (which is relatively simple on Ubuntu groovy; just apt install libgstreamer1.0-dev libgstrtspserver-1.0-dev first), you can read JPEG data directly from the camera and stream it via RTSP:

media-ctl --set-v4l2 '"ov5640 1-003c":0[fmt:JPEG_1X8/1280x720]' && gst-rtsp-launch 'v4l2src ! image/jpeg,width=1280,height=720 ! rtpjpegpay name=pay0'

This stream can be played using vlc rtsp://pinecube.local:8554/video or mpv, ffmpeg, gst-play-1.0, etc. If you increase the resolution to 1920x1080, mpv and gst-play can still play it, but VLC will complain "The total received frame size exceeds the client's buffer size (2000000). 73602 bytes of trailing data will be dropped!" unless you tell it to increase its buffer size with --rtsp-frame-buffer-size=300000.

gstreamer: h264 RTSP

Left as an exercise to the reader (please update the wiki). Hint: involves bits from the HLS and the JPEG RTSP examples above, but needs a rtph264pay name=pay0 element.
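As a starting point for that exercise, here is an untested sketch that follows the hint literally: it takes the raw capture and x264enc settings from the HLS example and hands them to gst-rtsp-launch with rtph264pay name=pay0 as the final element. Nobody has verified this on a PineCube for this page; the helper name h264_rtsp_cmd and the default resolution are our assumptions, and the helper only prints the commands.

```shell
# h264_rtsp_cmd [WIDTH] [HEIGHT] [FPS]
# Prints (does not run) a candidate sensor setup and gst-rtsp-launch
# invocation for software-encoded h264 over RTSP. Untested sketch.
h264_rtsp_cmd() {
    local w="${1:-640}" h="${2:-480}" fps="${3:-15}"
    printf '%s\n' "media-ctl --set-v4l2 '\"ov5640 1-003c\":0[fmt:UYVY8_2X8/${w}x${h}@1/${fps}]'"
    printf '%s\n' "gst-rtsp-launch 'v4l2src ! video/x-raw,width=${w},height=${h},format=UYVY,framerate=${fps}/1 ! videoconvert ! video/x-raw,format=I420 ! x264enc speed-preset=ultrafast tune=zerolatency ! rtph264pay name=pay0'"
}
```

If it works for you, the stream should be reachable at the same rtsp:// URL as in the JPEG RTSP example; please update the wiki with your findings.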

gstreamer: JPEG RTP UDP

Configure camera: media-ctl --set-v4l2 '"ov5640 1-003c":0[fmt:JPEG_1X8/1920x1080]'

Transmit with: gst-launch-1.0 v4l2src ! image/jpeg,width=1920,height=1080 ! rtpjpegpay name=pay0 ! udpsink host=$client_ip port=8000

Receive with: gst-launch-1.0 udpsrc port=8000 ! application/x-rtp, encoding-name=JPEG,payload=26 ! rtpjpegdepay ! jpegdec ! autovideosink

Note that the sender must specify the recipient's IP address in place of $client_ip; this can actually be a multicast address allowing for many receivers! (You'll need to specify a valid multicast address in the receivers' pipeline also; see gst-inspect-1.0 udpsrc and gst-inspect-1.0 udpsink for details.)
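A multicast variant might look like the sketch below. The group address 239.255.12.42 is a made-up example from the administratively scoped range, the helper is_multicast is ours, and the address and auto-multicast properties are the ones we believe udpsrc and udpsink expose; confirm them with gst-inspect-1.0 on your system. The script only prints the two pipelines.

```shell
# is_multicast ADDR: succeed if ADDR is an IPv4 multicast address,
# i.e. its first octet is in the range 224-239.
is_multicast() {
    local first="${1%%.*}"
    case "$first" in
        ''|*[!0-9]*) return 1 ;;   # not a dotted-decimal address
    esac
    [ "$first" -ge 224 ] && [ "$first" -le 239 ]
}

group=239.255.12.42   # hypothetical multicast group
port=8000

if is_multicast "$group"; then
    echo "send:    gst-launch-1.0 v4l2src ! image/jpeg,width=1920,height=1080 ! rtpjpegpay name=pay0 ! udpsink host=$group port=$port auto-multicast=true"
    echo "receive: gst-launch-1.0 udpsrc address=$group port=$port auto-multicast=true ! application/x-rtp, encoding-name=JPEG,payload=26 ! rtpjpegdepay ! jpegdec ! autovideosink"
fi
```

Every receiver on the network segment runs the same receive pipeline, while the sender transmits a single copy of the stream.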

gstreamer: JPEG RTP TCP

Configure camera: media-ctl --set-v4l2 '"ov5640 1-003c":0[fmt:JPEG_1X8/1920x1080]'

Transmit with: gst-launch-1.0 v4l2src ! image/jpeg,width=1920,height=1080 ! rtpjpegpay name=pay0 ! rtpstreampay ! tcpserversink host=0.0.0.0 port=1234

Receive with: gst-launch-1.0 tcpclientsrc host=pinecube.local port=1234 ! application/x-rtp-stream,encoding-name=JPEG ! rtpstreamdepay ! application/x-rtp, media=video, encoding-name=JPEG ! rtpjpegdepay ! jpegdec ! autovideosink

gstreamer and socat: MJPEG HTTP server

This rather ridiculous method uses bash, socat, and gstreamer to implement an HTTP-ish server which will serve your video as an MJPEG stream which is playable in browsers.

This approach has the advantage of being relatively low latency (under a second), browser-compatible, and not needing to reencode anything on the CPU (it gets JPEG data from the camera itself). Compared to HLS, it has the disadvantages that MJPEG requires more bandwidth than h264 for similar quality, pause and seek are not possible, stalled connections cannot jump ahead when they are unstalled, and, in the case of this primitive implementation, it only supports one viewer at a time. (Though, really, the RTSP examples on this page perform very poorly with multiple viewers, so...)

Gstreamer can almost do this by itself, as it has a multipartmux element which produces the headers which precede each frame. But sadly, despite various forum posts lamenting the lack of one over the last 12+ years, as of the end of the 50th year of the UNIX era (aka 2020), somehow nobody has yet gotten a webserver element merged in to gstreamer (which is necessary to produce the HTTP response, which is required for browsers other than firefox to play it). So, here is an absolutely minimal "webserver" which will get MJPEG displaying in a (single) browser.

Create a file called mjpeg-response.sh:

#!/bin/bash
media-ctl --set-v4l2 '"ov5640 1-003c":0[fmt:JPEG_1X8/1920x1080]'
b="--duct_tape_boundary"
echo -en "HTTP/1.1 200 OK\r\nContent-type: multipart/x-mixed-replace;boundary=$b\r\n\r\n"
gst-launch-1.0 v4l2src ! image/jpeg,width=1920,height=1080 ! multipartmux boundary=$b ! fdsink fd=2 2>&1 >/dev/null

Make it executable: chmod +x mjpeg-response.sh

Run the server: socat TCP-LISTEN:8080,reuseaddr,fork EXEC:./mjpeg-response.sh

And browse to http://pinecube.local:8080/ in your browser.
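To see what the browser actually receives, it can help to build the same kind of response by hand from a few still images. The sketch below illustrates the multipart/x-mixed-replace framing only: one HTTP header, then a delimiter line and part headers before each JPEG. It is not a byte-for-byte copy of what multipartmux emits, and the helper name mjpeg_response and the file names in the usage line are hypothetical.

```shell
# mjpeg_response BOUNDARY FILE...
# Writes an HTTP response header followed by one multipart part per file.
mjpeg_response() {
    local boundary="$1" f
    shift
    printf 'HTTP/1.1 200 OK\r\n'
    printf 'Content-Type: multipart/x-mixed-replace;boundary=%s\r\n\r\n' "$boundary"
    for f in "$@"; do
        # Each part: delimiter ("--" + boundary), part headers, blank line,
        # then the raw JPEG bytes.
        printf '%s%s\r\n' '--' "$boundary"
        printf 'Content-Type: image/jpeg\r\n'
        printf 'Content-Length: %s\r\n\r\n' "$(wc -c < "$f" | tr -d ' ')"
        cat "$f"
        printf '\r\n'
    done
}
```

Usage: mjpeg_response frame frame1.jpg frame2.jpg | head -c 300 shows the header and the start of the first part.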

Debugging camera issues with the gstreamer pipeline

If the camera does not appear to work, it is possible to change the v4l2src to videotestsrc and the gstreamer pipeline will produce a synthetic test image without using the camera hardware.

If the camera output shows only sensor noise lines over a black or white image, the camera may be in a broken state. When in that state, the following kernel messages were observed:

[ 1703.577304] alloc_contig_range: [46100, 464f5) PFNs busy
[ 1703.578570] alloc_contig_range: [46200, 465f5) PFNs busy
[ 1703.596924] alloc_contig_range: [46300, 466f5) PFNs busy
[ 1703.598060] alloc_contig_range: [46400, 467f5) PFNs busy
[ 1703.600480] alloc_contig_range: [46400, 468f5) PFNs busy
[ 1703.601654] alloc_contig_range: [46600, 469f5) PFNs busy
[ 1703.619165] alloc_contig_range: [46100, 464f5) PFNs busy
[ 1703.619528] alloc_contig_range: [46200, 465f5) PFNs busy
[ 1703.619857] alloc_contig_range: [46300, 466f5) PFNs busy
[ 1703.641156] alloc_contig_range: [46100, 464f5) PFNs busy
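A quick way to check whether a running system is in this state is to count those messages in the kernel log. This is a minimal sketch; the helper name count_contig_failures is ours, and it only matches the message text shown above.

```shell
# count_contig_failures: count "alloc_contig_range ... PFNs busy" lines
# in the kernel log text supplied on stdin.
count_contig_failures() {
    grep -c 'alloc_contig_range: .* PFNs busy'
}
```

Usage: dmesg | count_contig_failures prints the number of matching lines, with 0 meaning that none were logged.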

SDK

Stock Linux

- Direct Download from pine64.org

- MD5 (7zip file): efac108dc98efa0a1f5e77660ba375f8

- File Size: 3.50GB

How to compile

You can either set up a machine for the build environment, or use a Vagrant virtual machine provided by Elimo Engineering.

On a dedicated machine

Recommended system requirements:

- OS: (L)Ubuntu 16.04

- CPU: 64-bit based

- Memory: 8 GB or higher

- Disk: 15 GB free hard disk space

Install required packages

sudo apt-get install p7zip-full git make u-boot-tools libxml2-utils bison build-essential gcc-arm-linux-gnueabi g++-arm-linux-gnueabi zlib1g-dev gcc-multilib g++-multilib libc6-dev-i386 lib32z1-dev

Install older Make 3.82 and Java JDK 6

pushd /tmp
wget https://ftp.gnu.org/gnu/make/make-3.82.tar.gz
tar xfv make-3.82.tar.gz
cd make-3.82
./configure
make
sudo apt purge -y make
sudo ./make install
cd ..
# Please, download jdk-6u45-linux-x64.bin from https://www.oracle.com/java/technologies/javase-java-archive-javase6-downloads.html (requires free login)
chmod +x jdk-6u45-linux-x64.bin
./jdk-6u45-linux-x64.bin
sudo mkdir /opt/java/
sudo mv jdk1.6.0_45/ /opt/java/
sudo update-alternatives --install /usr/bin/javac javac /opt/java/jdk1.6.0_45/bin/javac 1
sudo update-alternatives --install /usr/bin/java java /opt/java/jdk1.6.0_45/bin/java 1
sudo update-alternatives --install /usr/bin/javaws javaws /opt/java/jdk1.6.0_45/bin/javaws 1
sudo update-alternatives --config javac
sudo update-alternatives --config java
sudo update-alternatives --config javaws
popd

Unpack SDK and then compile and pack the image

7z x 'PineCube Stock BSP-SDK ver1.0.7z'
mv 'PineCube Stock BSP-SDK ver1.0' pinecube-sdk
cd pinecube-sdk/camdroid
source build/envsetup.sh
lunch
mklichee
make -j3
pack

Using Vagrant

You can avoid setting up your machine and just use Vagrant to spin up a development environment in a VM.

Just clone the Elimo Engineering repo and follow the instructions in the readme file.

After spinning up the VM, you just need to run the build:

cd pinecube-sdk/camdroid
source build/envsetup.sh
lunch
mklichee
make -j3
pack

Community Projects

Share your project with a PineCube here!